Basic Architecture

SQL-Ledger is a web-based Perl program that interfaces with PostgreSQL using the relevant Perl modules. The code is well partitioned, and the main operation modules are written in an object-oriented way.

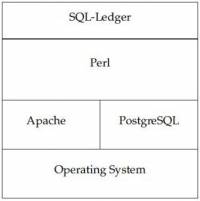

The Software Stack

Figure 8: The SQL-Ledger software stack in a typical implementation

SQL-Ledger runs in a Perl interpreter. I do not currently know whether it can be run through Perl2C or other language converters to target other environments. However, except for high-capacity environments, Perl is a good language choice for this sort of program.

SQL-Ledger used to support DB2 and Oracle as well as PostgreSQL. Currently, however, some of the functionality is implemented using PostgreSQL user-defined functions, which would need to be ported to other database managers before the software could work on them. This should not be too hard, but the fact that it has not been done yet may mean that there is no real demand for running the software under other RDBMSs.

One can substitute other web servers for Apache. Normally SQL-Ledger is run as a CGI program, but it may be possible to run it in the web server process (note that this may not be entirely thread-safe).

The operating system can be any that supports a web server and Perl (PostgreSQL need not run on the same system). However, there are a few issues with running SQL-Ledger on Windows, most notably in getting PostScript documents to print properly.

On the client side, any web browser will work. Currently, the layout is different for Lynx (which doesn't support frames), and the layout is not really useful under ELinks (the replacement for Lynx that does support frames). Some functionality requires JavaScript to work properly, though the application is usable without these features.

Capacity Planning

Some companies may ask how scalable SQL-Ledger is. In general, it is assumed that few companies are going to need a high-concurrency accounting system. However, with all the features available in SQL-Ledger, the number of staff with access to some part of the application may be high enough to make the question worthwhile. The question also becomes more important when companies look at integrating SQL-Ledger with a CRM solution, online store, or other environment. This section looks at a number of the known issues and their solutions.

Scalability Strategies

SQL-Ledger is a fairly standard web-based application, so one strategy is to run several front-end web servers with SQL-Ledger. In reality this can be a bit of a problem, because the database schema sometimes changes during upgrades. In these cases, it becomes impossible to safely run different versions of the software against the same database. SQL-Ledger checks the version of the database and, if that version is higher than the version of the software that is running, will refuse to run. One solution is to take half of the front-end servers offline while doing the initial upgrade, and then take the other half offline to upgrade once the first half has been brought back online.

The database manager is less scalable in the sense that one cannot just add more database servers and expect to carry on as normal. However, aside from the known issues listed below, there are few performance issues with it. If complex reports are necessary, these can be moved to a replica database (perhaps using Slony-I). If this solution is insufficient for database scalability, one might be able to move staff who do not need real-time access to new entries onto a PG-Pool/Slony-I cluster, where new transactions are entered on the master and other data is looked up on the replica. In certain circumstances, one can also offload a number of other queries from the master database in order to minimize its load. Overall, SQL-Ledger has very few application-level scalability issues.
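The version check that drives this upgrade procedure can be illustrated with a minimal sketch. It is written in Python for brevity rather than in SQL-Ledger's own Perl, and the function names, server names, and version strings are hypothetical; the actual check in SQL-Ledger may well be implemented differently.

```python
def parse_version(text):
    """Turn a dotted version string such as '2.6.10' into (2, 6, 10)."""
    return tuple(int(part) for part in text.split("."))


def may_run(software_version, database_version):
    """Return False when the database schema is newer than the software."""
    return parse_version(database_version) <= parse_version(software_version)


def servers_still_usable(server_versions, database_version):
    """List the front-end servers that can keep serving after a schema upgrade.

    server_versions maps a (hypothetical) server name to its software version.
    """
    return [name for name, version in sorted(server_versions.items())
            if may_run(version, database_version)]
```

Comparing the versions as tuples of integers rather than as strings matters here: as plain strings, "2.6.9" would sort after "2.6.10". With one server already upgraded and one not, `servers_still_usable({"web1": "2.6.9", "web2": "2.6.10"}, "2.6.10")` leaves only `web2` in service, which is why the servers are upgraded in two halves.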

Database Maintenance

PostgreSQL uses a technique called Multi-version Concurrency Control (MVCC) to provide a snapshot of the database at the beginning of a statement or transaction (depending on the transaction isolation level). When a row is updated, PostgreSQL leaves the old row in the database and inserts a new version of that row into the table. Over time, unless those old rows are removed, performance can degrade as PostgreSQL has to search through all the old versions of the row in order to determine which one ought to be the current one. Due to the way the SQL statements are executed in SQL-Ledger, most inserts will also create a dead row.

A second problem is that each transaction is given a transaction ID, and these IDs are numbered using 32-bit integers. If the transaction ID wraps around (prior to 8.1), data from transactions that appear (due to the wraparound) to be in the future suddenly becomes inaccessible. This problem was corrected in PostgreSQL 8.1, where the database will refuse to accept new transactions if the transaction ID gets too close to a wraparound. The problem is therefore less serious in 8.1 and later: the application merely becomes inaccessible rather than displaying apparent data loss. Wraparound would occur after about two billion transactions (half of the 32-bit ID space), counted across all databases running on that instance of PostgreSQL.

Prior to 8.1, the main way to prevent both these problems was to run a periodic vacuumdb command from cron (UNIX/Linux) or the task scheduler (Windows). In 8.1 or later, autovacuum capabilities are part of the back-end and can be configured with the database manager. See the PostgreSQL documentation for treatment of these subjects.

In general, if performance appears to be slowly degrading, one should try running vacuumdb -z from the shell in order to reclaim space and provide the planner with accurate information about the size and composition of the tables. If this fails, one can move on to other methods of determining the bottleneck and what to do about it.
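The reason old data can suddenly appear to be "in the future" is that 32-bit transaction IDs are compared on a circle rather than on a line. The following Python sketch illustrates that arithmetic only. It is a simplification that ignores PostgreSQL's reserved special transaction IDs and the freezing of old rows by vacuum, and the function names are my own.

```python
XID_SPACE = 2 ** 32   # transaction IDs are 32-bit counters
HALF_SPACE = 2 ** 31  # IDs more than this far ahead look "older" again


def xid_precedes(a, b):
    """True if transaction ID a is treated as older than b (circular comparison)."""
    diff = (a - b) % XID_SPACE
    # Interpret the difference as a signed 32-bit number.
    if diff >= HALF_SPACE:
        diff -= XID_SPACE
    return diff < 0


def advance(xid, count):
    """Transaction ID reached after `count` further transactions."""
    return (xid + count) % XID_SPACE
```

A row written by transaction 1000 is correctly seen as being in the past while fewer than 2**31 transactions have followed it, so `xid_precedes(1000, advance(1000, 1_000_000))` is true. Once more than 2**31 transactions have passed, the same comparison flips and the row appears to come from the future, which is the situation that regular vacuuming is meant to prevent.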

Known Issues

The PostgreSQL planner assumes a minimum size of ten pages for a physically empty table. The reasoning behind this choice is that a table could grow rapidly, and one could end up with bad database performance if the planner assumed a very small table. However, a join between a very large table with millions of rows and a physically empty table can produce a very bad query plan. In this case, the planner will choose a nested loop join and run through this loop for every row in the large table. As a result, performance will suddenly drop once the large table becomes too large to do index scans of the join criteria on both tables effectively.

This problem most often occurs when people have no warehouses, departments, or projects defined and are running systems with a large number of transactions (such as a point of sale environment). The last time I saw this problem, the server would wait for thirty seconds before displaying a new point of sale screen, while the server CPU activity would spike to 100%. One solution is to define one warehouse, department, and project, and then run vacuumdb -z from the shell to force the planner to acknowledge these tables as single-row tables. The other option is to go into the source code and edit the database queries to omit unused tables.
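For sites that script their maintenance, the vacuumdb -z step can be wrapped in a small helper. The sketch below is in Python and only assembles and runs the command mentioned in the text; the database name passed in is whatever the site uses, the helper names are hypothetical, and it assumes vacuumdb is on the PATH and that connection settings come from the environment.

```python
import shlex
import subprocess


def vacuum_analyze_command(dbname):
    """Build the argument list for `vacuumdb -z` against one database."""
    # -z asks vacuumdb to also analyze, refreshing the planner's statistics.
    return ["vacuumdb", "-z", "-d", dbname]


def as_shell_line(command):
    """Render the argument list as a quoted line, e.g. for a crontab entry."""
    return " ".join(shlex.quote(arg) for arg in command)


def run_vacuum_analyze(dbname):
    """Run the command; raises CalledProcessError if vacuumdb fails."""
    subprocess.run(vacuum_analyze_command(dbname), check=True)
```

Passing the command as a list rather than a single string avoids shell quoting problems when a database name contains spaces or other special characters.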

(First version from: An Introduction to SQL-Ledger by Chris Travers, 2006)